Batch operating systems, the unsung heroes of computing’s past, are far from obsolete. This deep dive explores their history, from the punch card days to their surprisingly relevant modern applications. We’ll unravel the mysteries of Job Control Language (JCL), dissect scheduling algorithms, and explore the surprisingly complex world of I/O management in these systems. Get ready to discover why batch processing, despite its age, continues to power critical applications.

We’ll cover the core concepts, examining their evolution and comparing them to more modern OS paradigms like time-sharing and real-time systems. We’ll then dive into the nitty-gritty details of JCL, exploring its syntax, structure, and practical applications. We’ll also analyze the advantages and disadvantages of batch processing, examining its efficiency across various applications. Finally, we’ll look at modern uses and future trends, showcasing the enduring legacy of this foundational technology.

Definition and History of Batch Operating Systems

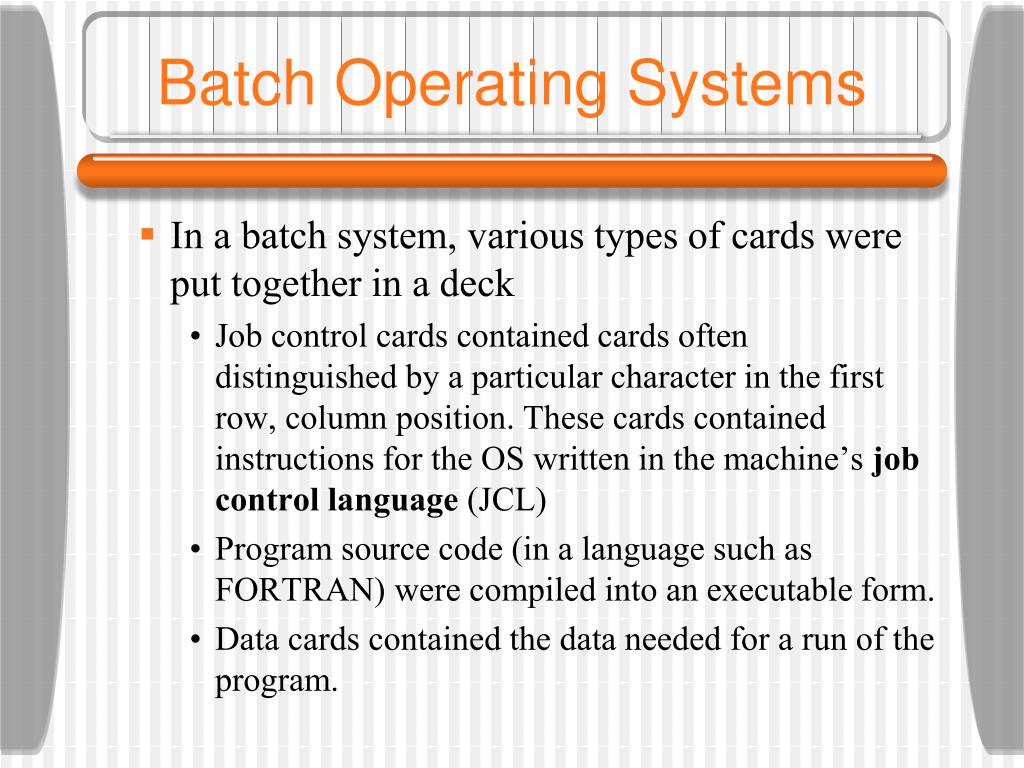

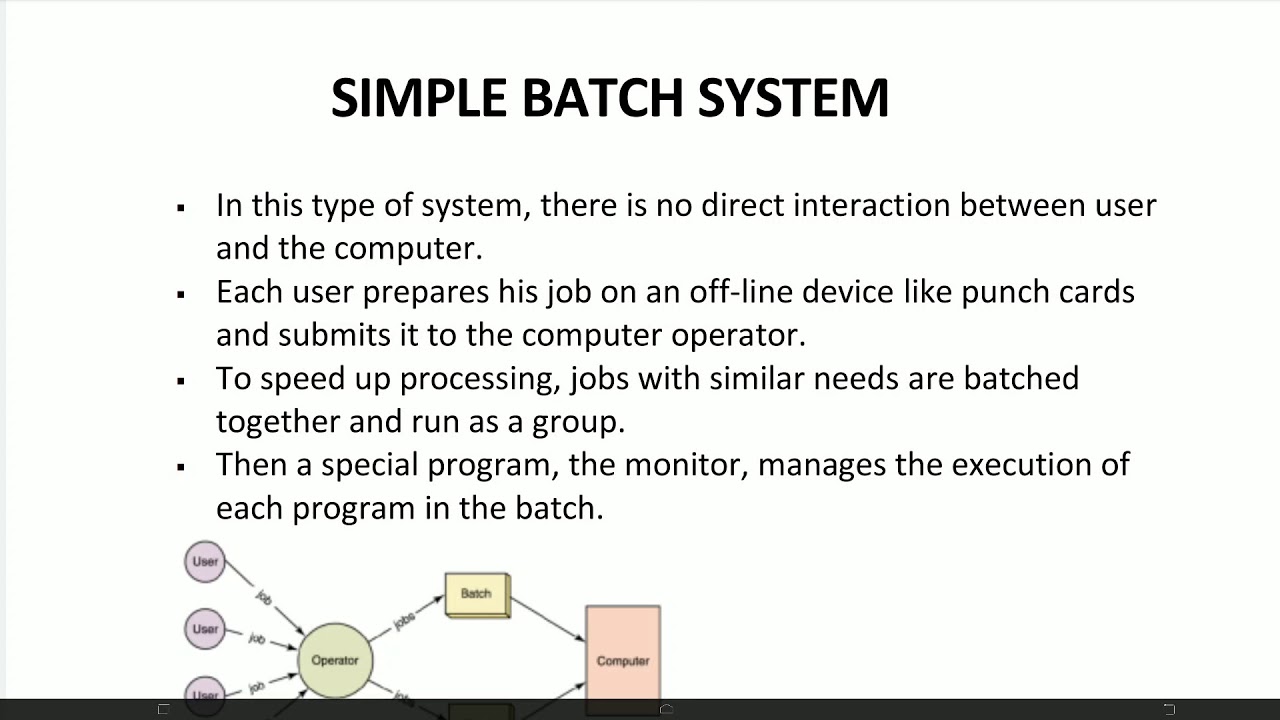

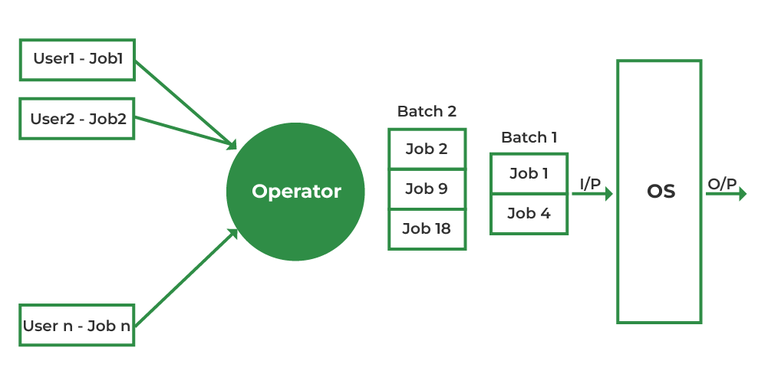

Batch operating systems represent a foundational paradigm in computer science, enabling the execution of multiple jobs sequentially without direct human intervention. Essentially, a batch OS gathers a collection of jobs – programs or tasks – and processes them in a predefined order, one after another, improving efficiency compared to earlier, single-job systems. This automated approach minimizes idle time and maximizes resource utilization, a significant leap forward in early computing.Batch processing fundamentally altered how computers were used.

Instead of a single user directly interacting with the machine, jobs were submitted in batches – often via punched cards or magnetic tape – and the OS would manage their execution. This removed the need for constant human supervision, allowing for more efficient use of expensive computing resources. The transition from manual, single-job operation to automated batch processing marked a critical turning point in the evolution of computing.

Evolution of Batch Processing

The evolution of batch processing reflects the technological advancements in hardware and software. Early batch systems relied on simple control cards to specify job parameters, such as input/output devices and program locations. As computers became more powerful and storage capacities increased, batch systems incorporated more sophisticated features like job scheduling algorithms to optimize resource allocation and improve throughput. Modern batch processing, while still adhering to the fundamental concept of sequential job execution, leverages advanced techniques like parallel processing and distributed computing to handle significantly larger workloads.

The transition from punched cards to sophisticated job control languages and distributed systems highlights the remarkable evolution of this core OS paradigm.

Significant Milestones in Batch OS Development

The development of batch operating systems was a gradual process, marked by several key innovations. Early examples, like the IBM 704’s IBSYS (Input/Output Batch System), laid the groundwork for more advanced systems. The development of job control languages (JCLs) like those used in IBM’s OS/360 significantly simplified job submission and management. These JCLs allowed users to specify complex job parameters through a standardized set of commands, automating many aspects of the batch processing workflow.

The introduction of spooling (Simultaneous Peripheral Operations On-Line) further enhanced efficiency by allowing input and output operations to overlap with processing, minimizing I/O bottlenecks. Later developments focused on improved scheduling algorithms, resource allocation strategies, and the integration of batch processing with other operating system functionalities. This continuous refinement led to the highly efficient and robust batch processing capabilities found in many modern systems.

Comparison of Batch Processing with Other Operating System Paradigms

Batch processing contrasts sharply with other operating system paradigms. Time-sharing systems, for example, allow multiple users to interact with the computer concurrently, sharing its resources. This interactive approach provides immediate feedback, unlike the delayed response inherent in batch processing. Real-time operating systems (RTOS) prioritize immediate response to external events, crucial for applications like process control and robotics. Unlike batch systems, RTOSs are designed to meet strict timing constraints.

While batch processing excels at efficiently handling large volumes of independent jobs, time-sharing and real-time systems prioritize interactive responsiveness and immediate event handling, respectively. Each paradigm serves distinct needs, and the choice depends on the specific application requirements.

Job Control Language (JCL) and its Role

Job Control Language, or JCL, is the unsung hero of batch processing. Think of it as the instruction manual for your batch job – it tells the operating system exactly what to do, when to do it, and what resources it needs. Without JCL, the entire batch system would be a chaotic mess of programs vying for attention. It’s the crucial link between your programs and the operating system, ensuring smooth and efficient execution of your batch jobs.JCL acts as the intermediary between the user and the batch operating system.

It’s a set of commands and directives that specify everything from the input and output files to the specific programs to be executed and the resources required for the process. Essentially, it’s a highly structured script that orchestrates the entire batch processing workflow. This eliminates the need for manual intervention during the execution of the batch job, making it ideal for automated, large-scale processing tasks.

JCL Syntax and Structure

A typical JCL statement follows a specific format. It begins with a job statement (//JOB), followed by execution statements (//STEP), and ends with a job statement (//). Each statement consists of several fields, typically separated by blanks. Key fields include the job name, step name, program name, input and output file names, and resource allocation requests (like memory or time).

Errors in JCL syntax can halt the entire job, emphasizing the importance of accurate coding. For example, a missing or misspelled can cause a fatal error. The system reads and interprets these statements sequentially, carrying out the specified instructions one after another.

Examples of JCL Statements for Different Tasks

Let’s illustrate with some simplified examples. Remember, actual JCL syntax varies slightly depending on the specific batch operating system.For file manipulation (copying a file):“`jcl//STEP1 EXEC PGM=IEBCOPY//SYSPRINT DD SYSOUT=*//SYSIN DDCOPY INFILE OUTFILE/*//INFILE DD DSN=MY.INPUT.FILE,DISP=SHR//OUTFILE DD DSN=MY.OUTPUT.FILE,DISP=(NEW,CATLG),SPACE=(CYL,(1,1))“`This JCL copies `MY.INPUT.FILE` to `MY.OUTPUT.FILE`. `IEBCOPY` is the utility program, `SYSPRINT` directs output to the console, `SYSIN` contains the copy command, and the DD statements define the input and output files.For program execution:“`jcl//STEP1 EXEC PGM=MYPROGRAM//INPUT DD DSN=MY.INPUT.DATA,DISP=SHR//OUTPUT DD DSN=MY.OUTPUT.DATA,DISP=(NEW,CATLG),SPACE=(CYL,(1,1))“`This JCL executes the program `MYPROGRAM`, using `MY.INPUT.DATA` as input and writing the output to `MY.OUTPUT.DATA`.

Hypothetical JCL Script for a Specific Batch Processing Scenario

Imagine a scenario where we need to process a large dataset, sort it, and then generate a report. This would involve multiple steps:“`jcl//SORTJOB JOB (ACCTNUM),’SORT AND REPORT GENERATION’,MSGCLASS=X,MSGLEVEL=(1,1)//STEP1 EXEC PGM=SORT,REGION=2048K//SORTIN DD DSN=RAWDATA.DATASET,DISP=SHR//SORTOUT DD DSN=SORTEDDATA.DATASET,DISP=(NEW,CATLG),SPACE=(CYL,(1,1))//STEP2 EXEC PGM=REPORTGEN,REGION=1024K//INPUT DD DSN=SORTEDDATA.DATASET,DISP=SHR//OUTPUT DD SYSOUT=*“`This JCL first sorts `RAWDATA.DATASET` using the `SORT` utility and stores the result in `SORTEDDATA.DATASET`. Then, `REPORTGEN` processes the sorted data and prints the report to the console.

Note the resource allocation (`REGION`) specified for each step.

Advantages and Disadvantages of Batch Processing

Batch processing, a cornerstone of early computing, offers a unique set of advantages and disadvantages compared to more modern interactive systems. Understanding these trade-offs is crucial for determining when this approach is the most appropriate solution. While seemingly outdated in our always-on, real-time world, batch processing remains a vital tool in many contexts.

Advantages of Batch Processing

Batch processing shines in situations requiring high throughput and minimal human intervention. Its strengths lie in its efficiency when dealing with large volumes of similar tasks.

- High Throughput: Batch systems excel at processing large amounts of data efficiently. They can handle numerous jobs concurrently without requiring constant user interaction, maximizing resource utilization.

- Reduced Human Intervention: Once a batch job is submitted, it runs autonomously, freeing up human operators to focus on other tasks. This minimizes errors caused by manual intervention.

- Cost-Effectiveness: By automating repetitive tasks, batch processing reduces labor costs and improves overall operational efficiency, especially beneficial for routine processes like payroll or billing.

- Improved Resource Utilization: Batch jobs can be scheduled during off-peak hours, optimizing the use of computing resources and minimizing system bottlenecks.

- Suitable for Non-Interactive Tasks: Tasks that don’t require immediate user response, such as nightly backups, data processing, and report generation, are ideally suited for batch processing.

Disadvantages of Batch Processing

Despite its advantages, batch processing has limitations that make it unsuitable for certain applications. Its rigid structure and lack of real-time interaction can be significant drawbacks.

- Lack of Interactivity: Users cannot interact with the system during job execution. This means immediate feedback or error correction is impossible.

- Longer Turnaround Time: Jobs must wait in a queue until their turn for processing, resulting in potentially long delays before results are available. This delay can be problematic for time-sensitive applications.

- Debugging Challenges: Identifying and correcting errors in batch jobs can be more complex than in interactive systems, as real-time debugging isn’t possible.

- Limited Error Handling: Error handling in batch systems is often less sophisticated than in interactive environments. Errors may only be detected after the job has completed, potentially leading to wasted resources.

- Inflexibility: Batch jobs are typically rigid and require careful planning and preparation. Adapting to unexpected changes or requests during execution is difficult.

Batch Processing Suitability

Batch processing finds its niche in specific application areas where its strengths outweigh its limitations.

Batch processing is exceptionally well-suited for tasks that are: repetitive, non-interactive, and involve large datasets. Examples include payroll processing, generating monthly reports, nightly database backups, and large-scale scientific simulations. In these scenarios, the efficiency gains from automated, scheduled processing far outweigh the drawbacks of a lack of immediate feedback.

Okay, so batch operating systems, right? They’re all about processing jobs sequentially, one after the other. Think of it like a super-efficient workflow, which is kinda what you need when working on massive projects in something like autocad civil 3d , where you might be running multiple complex calculations. Batch processing helps streamline those tasks, making the whole process way more manageable for your computer.

It’s all about maximizing efficiency, even with really intense programs.

Comparison of Batch and Interactive Processing

The choice between batch and interactive processing depends heavily on the application’s requirements.

Interactive processing excels in situations demanding immediate user feedback and response, such as word processing, online gaming, and web browsing. Its real-time nature allows for dynamic interaction and quick adjustments. Batch processing, conversely, prioritizes efficiency and throughput for non-interactive, high-volume tasks. Consider a payroll system: batch processing efficiently handles salary calculations for thousands of employees overnight, while an interactive system would be inefficient and prone to errors when dealing with such scale.

Similarly, scientific simulations involving extensive computations are far better suited to batch processing, while designing a webpage is more effectively done interactively.

Scheduling Algorithms in Batch Systems

Batch operating systems, while seemingly simple, rely heavily on efficient scheduling algorithms to maximize throughput and minimize waiting times. The choice of algorithm significantly impacts system performance, particularly under varying workloads. Understanding these algorithms is crucial for optimizing batch processing environments.

First-In, First-Out (FIFO) Scheduling

FIFO, the simplest scheduling algorithm, processes jobs in the order they arrive. Think of it like a queue at a bakery – the first person in line gets served first. While straightforward to implement, FIFO can suffer from significant drawbacks, especially with a mix of short and long jobs. A long job arriving early can block shorter jobs, leading to increased average waiting time.

For example, if a 10-minute job arrives before a 1-minute job, the 1-minute job will have to wait the entire 10 minutes, even though it could have been completed much sooner.

Shortest Job First (SJF) Scheduling

SJF prioritizes jobs with the shortest estimated processing time. This algorithm aims to minimize the average waiting time by tackling smaller tasks first. However, it requires accurate estimations of job lengths, which can be challenging in practice. Imagine a print shop; SJF would prioritize smaller print jobs over larger ones to get more jobs completed quickly. While this reduces average wait time, it can lead to longer turnaround times for larger jobs.

Priority Scheduling

Priority scheduling assigns each job a priority level, and the system processes jobs with higher priorities first. This allows for the prioritization of critical or time-sensitive tasks. Priorities can be assigned based on various factors, such as job type, user importance, or deadlines. For instance, a hospital’s patient monitoring system might have higher priority than a routine backup job.

The downside is that low-priority jobs might experience significant delays, potentially leading to starvation if high-priority jobs continuously arrive.

Comparison of Scheduling Algorithms

The performance of these algorithms varies depending on the workload characteristics. FIFO performs well with homogenous workloads (jobs of similar length), while SJF excels in minimizing average waiting time with a mix of job lengths. Priority scheduling is effective for handling critical tasks but can lead to unfairness if not managed properly.

| Algorithm | Average Waiting Time | Throughput | Implementation Complexity |

|---|---|---|---|

| FIFO | Can be high with varying job lengths | Moderate | Low |

| SJF | Generally low | Moderate to High | Medium |

| Priority | Varies greatly depending on priority assignment | Varies greatly depending on priority assignment | Medium to High |

Simulation of Scheduling Algorithms

A simple simulation can demonstrate the effects of different scheduling algorithms. Imagine a system with three jobs: Job A (5 units of processing time), Job B (2 units), and Job C (8 units).Under FIFO, the order would be A-B-C, resulting in completion times of 5, 7, and 15 units respectively. Total waiting time: 12 units.Under SJF, the order would be B-A-C, resulting in completion times of 2, 7, and 15 units respectively.

Total waiting time: 7 units.Under Priority (A=high, B=medium, C=low), if priority is the only factor, the order would be A-B-C, resulting in completion times of 5, 7, and 15 units respectively. Total waiting time: 12 units. This highlights how the priority algorithm doesn’t always guarantee the lowest waiting time. This simple simulation shows how different algorithms can lead to significantly different waiting times and overall system performance.

More complex simulations with larger datasets and varying job arrival times would provide a more comprehensive analysis.

Input/Output Management in Batch Systems: Batch Operating System

Batch systems, unlike interactive ones, deal with a queue of jobs submitted offline. This necessitates robust and efficient mechanisms for handling input and output operations, minimizing idle time and maximizing throughput. The core challenge lies in coordinating the flow of data between the jobs and the various I/O devices, often involving significant delays inherent to mechanical devices.

Input/Output Mechanisms in Batch Environments

Batch systems employ specialized software to manage the transfer of data between jobs and peripheral devices like card readers, tape drives, and printers. This involves buffering techniques to smooth out the speed differences between the CPU and slower I/O devices. For instance, a job might write its output to a temporary buffer in memory before being transferred to a printer, preventing the CPU from being held up while the printer mechanically prints.

Similarly, input from a card reader might be pre-read and stored in memory, feeding the CPU data as needed. The system carefully sequences these operations to ensure efficient resource utilization. Sophisticated error handling routines are also crucial; if a printer malfunctions, the system needs to gracefully handle the situation, perhaps rerouting the output to another device or queuing the job for later processing.

The Role of Spooling in Optimizing I/O Efficiency

Spooling (Simultaneous Peripheral Operations On-Line) is a cornerstone of efficient I/O management in batch systems. It uses a dedicated area on disk, often referred to as a spool, to temporarily store input and output data. Instead of directly connecting a job to an I/O device, the job interacts with the spool. Input files are copied from their original location to the input spool before being processed.

Output from a job is written to the output spool and then transferred to the appropriate device (e.g., printer) at a later time by a dedicated spooler process. This decoupling allows the CPU to continue processing other jobs without being hindered by the slower speeds of I/O devices. For example, a long-running computation can write its output to the spool and then proceed to the next phase without waiting for the printer to finish.

Managing Input/Output Devices in a Batch System

Managing I/O devices in a batch environment requires a sophisticated approach. The system needs to track the status of each device (busy/idle), handle device errors (paper jams, tape errors), and manage device allocation. A device driver is assigned to each device type, providing a consistent interface for the operating system to interact with diverse hardware. The operating system maintains a device table, containing information about each connected device, its current status, and the jobs currently using it.

This allows for efficient allocation and prevents conflicts where multiple jobs attempt to access the same device simultaneously. Prioritization schemes might be implemented, giving preference to certain jobs or devices based on pre-defined criteria. For instance, a high-priority job might be given access to a fast printer even if another job is already waiting.

Flowchart of I/O Management in a Batch OS

[The following describes a flowchart. Visual representation would be beneficial but is outside the scope of this text-based response.]The flowchart begins with a job entering the system. It then proceeds to an “Input Spool” stage, where the job’s input is copied to the disk spool. Next, the job enters the CPU for processing. During processing, output is written to an “Output Spool”.

After processing, the job moves to a “Device Allocation” stage, where the spooler process assigns the output to an available device (printer, tape drive, etc.). Finally, the output is transferred to the assigned device, and the job is considered complete. Error handling is integrated at each stage, with pathways to handle issues such as device malfunctions or insufficient spool space.

The system also monitors device status and job priorities throughout the process.

Error Handling and Recovery in Batch Jobs

Batch processing, while efficient for large-scale tasks, is not immune to errors. Understanding how these errors manifest, how they’re detected, and how to recover from them is crucial for maintaining system stability and data integrity. Effective error handling prevents job failures, minimizes downtime, and ensures reliable processing of large volumes of data.Error detection and recovery mechanisms are integral parts of a robust batch processing system.

These mechanisms range from simple checks for invalid input data to complex procedures for restarting jobs from checkpoints. The sophistication of these mechanisms depends on the complexity of the batch jobs and the criticality of the data being processed.

Common Error Conditions in Batch Processing, Batch operating system

Several common error conditions can disrupt the smooth execution of batch jobs. These include input/output errors (e.g., a file not found, insufficient disk space, network connectivity issues), processing errors (e.g., arithmetic overflow, division by zero, invalid data encountered during processing), and system errors (e.g., memory allocation failures, operating system crashes). The severity and impact of these errors vary depending on their nature and the stage of the job at which they occur.

Effective error handling requires a layered approach, incorporating checks at various points in the processing pipeline.

Error Detection Mechanisms

Batch systems employ various mechanisms to detect errors. These include built-in operating system checks (like file access permissions and memory management), program-level error handling (using exception handling in programming languages), and job control language (JCL) directives that specify error handling routines. For instance, a JCL statement might specify that if a particular step fails, a specific recovery procedure should be initiated.

Regular logging of job execution, including error messages, is also critical for post-mortem analysis and debugging.

Error Recovery Procedures

Recovering from errors in batch jobs involves a combination of automated and manual procedures. Automated recovery might involve retrying a failed operation a certain number of times, switching to a backup data source, or executing an alternative processing path. Manual recovery, on the other hand, often requires human intervention to diagnose the problem, correct the error condition, and restart the job.

Checkpointing, where the state of the job is periodically saved, allows for resuming from the last saved point rather than restarting from the beginning. This significantly reduces processing time in case of errors.

Error Types, Causes, and Recovery Strategies

| Error Type | Cause | Recovery Strategy |

|---|---|---|

| Input File Not Found | Incorrect file path or filename specified in JCL. | Verify file path and filename. Resubmit the job with the correct information. |

| Insufficient Disk Space | Output files exceed available disk space. | Free up disk space or use a different output location. Resubmit the job. |

| Arithmetic Overflow | Calculation results exceed the maximum representable value. | Modify the program to handle larger values or use more appropriate data types. Resubmit the job. |

| Invalid Data Encountered | Input data contains unexpected values or formats. | Identify and correct the invalid data in the input file. Resubmit the job. |

| System Crash | Operating system failure. | Restart the operating system and resubmit the job. Checkpointing may allow resuming from a saved point. |

Security Considerations in Batch Systems

Batch processing, while efficient for large-scale data operations, presents unique security challenges. The inherent automation and often limited human interaction during execution increase the risk of unauthorized access, data breaches, and system compromise if not properly secured. Robust security measures are crucial to protect sensitive data and maintain system integrity in batch environments.

Potential Security Risks in Batch Processing

Batch systems, due to their automated nature, can be vulnerable to various security threats. Malicious actors might exploit vulnerabilities in the system to inject harmful code, alter data, or gain unauthorized access to sensitive information. For example, a compromised batch job could potentially delete crucial files, modify financial records, or even launch attacks against other systems. Another risk is the lack of real-time monitoring, which makes it harder to detect and respond to attacks promptly.

Data breaches can go unnoticed for extended periods, potentially leading to significant damage.

Data Integrity and Confidentiality in Batch Environments

Maintaining data integrity and confidentiality is paramount in batch systems. This involves implementing strong access controls, data encryption, and regular audits. Data encryption, both in transit and at rest, protects sensitive information from unauthorized access even if a breach occurs. Regular audits help identify anomalies and potential security weaknesses. Using digital signatures to verify the authenticity and integrity of batch jobs and their input/output data adds another layer of protection.

For instance, a financial institution processing payroll data would utilize encryption to protect employee salaries and digital signatures to verify the legitimacy of the payroll batch job itself.

Access Control Mechanisms for Batch Jobs and Data

Effective access control is vital for securing batch systems. This involves implementing role-based access control (RBAC) to restrict access to specific batch jobs and data based on user roles and permissions. Users should only have access to the data and jobs they absolutely need for their tasks. Strong password policies, multi-factor authentication, and regular password changes should be enforced.

Auditing access logs helps track user activities and identify potential security violations. For example, a database administrator might have full access to the batch jobs related to database maintenance, while a regular employee might only have read-only access to specific batch job outputs.

Security Measures in Modern Batch Systems

Modern batch systems incorporate various security enhancements. These include robust authentication mechanisms, integrated security monitoring tools, and intrusion detection systems. These systems often leverage encryption technologies to protect data both in transit and at rest, employing advanced algorithms to ensure confidentiality. Regular security audits and vulnerability assessments are conducted to identify and mitigate potential weaknesses. For example, many modern cloud-based batch processing services incorporate features like data encryption at rest using technologies such as AES-256 and offer detailed audit logs to monitor access and activity.

They also frequently integrate with centralized security information and event management (SIEM) systems for comprehensive threat detection and response.

Modern Applications of Batch Processing

Batch processing, despite its age, remains a vital part of modern computing. While interactive applications dominate our daily experience, many critical processes rely on the efficient, behind-the-scenes power of batch jobs. These processes often involve large datasets and require minimal human intervention, making batch processing the ideal solution.Batch processing continues to thrive because it excels at automating repetitive tasks and handling massive volumes of data efficiently.

This makes it particularly valuable in scenarios where speed, consistency, and cost-effectiveness are paramount. Many industries depend on it for core operations, and its integration with cloud technologies is expanding its reach and capabilities.

Industries Utilizing Batch Processing

Batch processing remains a cornerstone in several industries due to its ability to handle large-scale data processing and automation needs. For example, financial institutions use batch processing for nightly reconciliation of transactions, ensuring accuracy and compliance. Similarly, the healthcare industry relies on batch processing for tasks like medical billing, claims processing, and generating patient reports. The manufacturing sector uses it for inventory management, production scheduling, and quality control analysis.

In short, any industry dealing with large datasets and requiring automated, scheduled processing benefits from batch processing.

Reasons for Continued Relevance of Batch Processing

Several factors contribute to the persistent relevance of batch processing in today’s technological landscape. First, its inherent efficiency in processing large datasets remains unmatched by other methods. Second, batch processing minimizes human intervention, reducing the potential for errors and improving consistency. Third, it allows for optimized resource utilization, particularly during off-peak hours, resulting in significant cost savings. Finally, many legacy systems are built around batch processing, and the cost and complexity of migrating away from these systems often outweigh the potential benefits of switching to alternative methods.

Batch Processing in Legacy Systems vs. Cloud Environments

The application of batch processing differs slightly between legacy systems and cloud-based environments. In legacy systems, batch jobs are often scheduled and managed using on-premise infrastructure and specialized job schedulers. This approach can be inflexible and resource-intensive. In contrast, cloud-based environments offer greater scalability and flexibility. Cloud platforms provide managed services for scheduling and managing batch jobs, allowing for easier scaling of resources based on demand and automated failover mechanisms.

This enhanced scalability and cost-effectiveness are driving the adoption of cloud-based batch processing solutions. For instance, a large retail company might use a legacy system for its core inventory management but leverage a cloud-based batch processing service for analyzing massive customer transaction data, benefiting from the cloud’s scalability during peak seasons.

Future Trends in Batch Processing

Batch processing, while seemingly a relic of the past, continues to play a vital role in modern computing. Its inherent efficiency in handling large volumes of data makes it a persistent force, even in the face of real-time and cloud-based systems. However, ongoing advancements in technology are shaping the future of batch processing, leading to increased speed, scalability, and sophistication.The evolution of batch processing is inextricably linked to advancements in hardware and software.

Faster processors, increased memory capacity, and parallel processing capabilities all contribute to significantly improved performance. Software innovations, such as more efficient algorithms and optimized data structures, further enhance the speed and efficiency of batch jobs. These developments are not simply incremental improvements; they represent a qualitative shift in the capabilities of batch processing, enabling it to tackle increasingly complex tasks.

Increased Automation and Orchestration

Modern batch processing is moving beyond simple sequential execution. Sophisticated orchestration tools and workflow management systems are emerging, allowing for the automated chaining of multiple batch jobs, conditional execution based on data outcomes, and real-time monitoring of progress. For example, a large financial institution might orchestrate a series of batch jobs to process transactions, generate reports, and update databases, all seamlessly integrated and managed by a central system.

This level of automation reduces manual intervention, minimizes errors, and increases overall efficiency.

Cloud-Based Batch Processing

The rise of cloud computing has significantly impacted batch processing. Cloud platforms offer scalable, on-demand resources, allowing organizations to easily adjust their processing capacity based on fluctuating workload demands. This eliminates the need for large upfront investments in hardware and allows for more efficient resource utilization. Moreover, cloud services often integrate advanced features such as serverless computing, which automatically scales resources based on the needs of the batch job, further optimizing costs and performance.

Companies like Amazon Web Services (AWS) and Google Cloud Platform (GCP) offer robust cloud-based batch processing services, highlighting the growing importance of this approach.

Integration with Big Data and Machine Learning

Batch processing is becoming increasingly integrated with big data technologies and machine learning algorithms. The ability to process massive datasets efficiently is crucial for many machine learning tasks, and batch processing provides a highly effective approach. For instance, training a large language model often involves processing terabytes of data using distributed batch processing techniques. This synergy between batch processing and advanced analytics will continue to grow, enabling new applications in areas such as predictive modeling, fraud detection, and personalized recommendations.

Enhanced Security and Data Governance

With the increasing sensitivity of data processed in batch jobs, security and data governance are paramount. Future trends will see a greater emphasis on robust security measures, including encryption at rest and in transit, access control mechanisms, and audit trails. Advanced data governance frameworks will also play a crucial role in ensuring compliance with regulations such as GDPR and CCPA.

This will involve implementing tools and processes for data lineage tracking, data quality monitoring, and automated remediation of security vulnerabilities. The financial services sector, for example, already employs stringent security protocols for batch processes handling sensitive customer data.

Rise of Serverless Batch Processing

Serverless architectures are poised to significantly influence the future of batch processing. By abstracting away server management, serverless functions allow developers to focus solely on the code logic of their batch jobs. This approach offers automatic scaling, pay-per-use pricing, and reduced operational overhead, making it an attractive option for organizations of all sizes. The increased efficiency and reduced management burden contribute to a cost-effective and scalable solution for various batch processing needs.

This trend aligns with the growing adoption of microservices and event-driven architectures in modern software development.

Last Recap

From the clatter of punch cards to the hum of modern servers, batch operating systems have proven remarkably resilient. While interactive systems dominate the user experience today, batch processing remains a critical component of many large-scale applications, handling massive data processing tasks with unparalleled efficiency. Understanding its principles provides a crucial foundation for comprehending the evolution and future of computing.

So, the next time you encounter a large-scale data processing task, remember the power and enduring relevance of the batch operating system.

FAQ Section

What’s the difference between batch and interactive processing?

Batch processing submits jobs in a queue and processes them without direct user interaction, while interactive processing allows for real-time user input and feedback.

Are batch operating systems still used today?

Absolutely! They’re crucial for tasks like payroll processing, large-scale data analysis, and nightly backups – anywhere large volumes of data need processing without immediate user interaction.

What are some common JCL errors?

Common errors include syntax errors in JCL statements, incorrect file specifications, and insufficient resources (memory or disk space).

How does spooling improve I/O efficiency?

Spooling (Simultaneous Peripheral Operations On-Line) uses intermediate storage (like a disk) to buffer I/O operations, allowing the CPU to continue processing while I/O devices operate concurrently.

What security risks are associated with batch processing?

Risks include unauthorized access to data files, malicious code injection within batch jobs, and data breaches due to insufficient access controls.